This past week, and in my spare time over the semester, I have been working on the action system. In an earlier post I described the action system as a relationship between the user, the assembly, and the actions that the assembly is capable of executing through its modules. When I went to implement this, I discovered it would not be as simple as it first appeared.

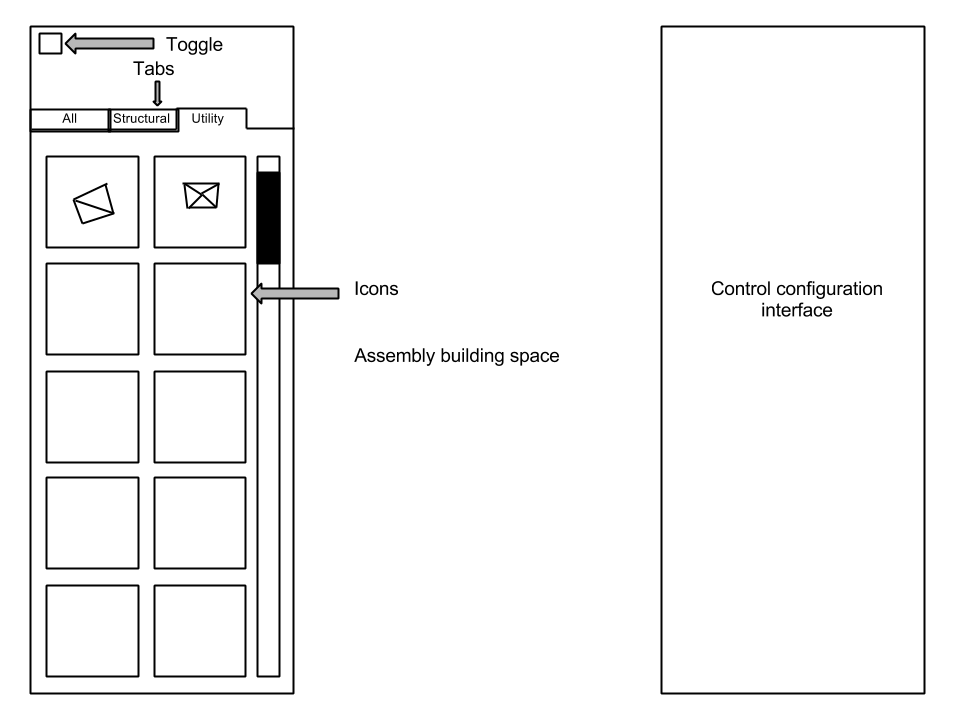

The action system was going to be broken into three parts: the easy-to-use simple logic system (which would allow keys to be assigned directly to specific actions), the logic/node editor (which would allow very complex programming without the user writing any code), and the code editor (just like it sounds: you write code to program behavior).

The first part, the easy-to-use logic system, was going to be implemented as quickly and simply as possible while still allowing for expansion. Later on, once more of the game functionality had been added, the second part would be implemented, allowing complex node/logic editing in addition to the simple keybinding. Unfortunately, the two are inseparable: in order to implement the first part, the second part (or at least its underlying infrastructure) must exist first.

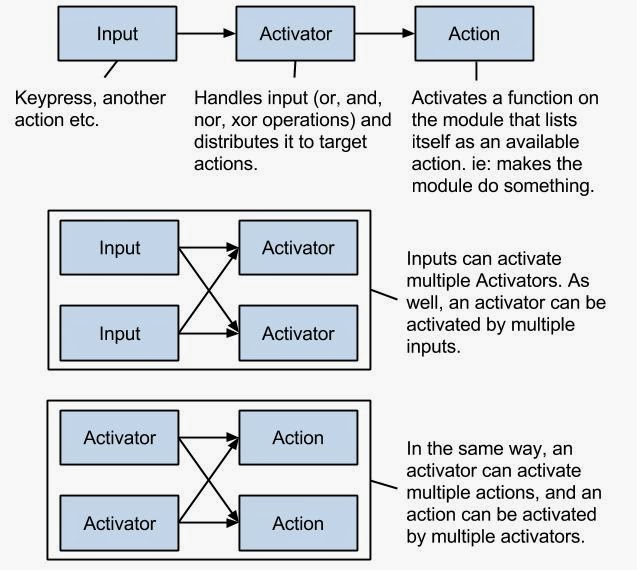

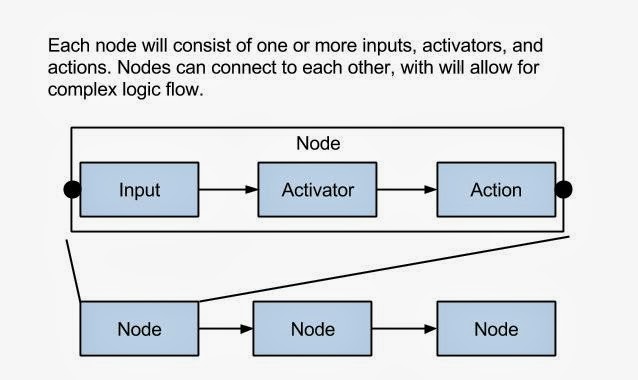

So this past week, and in my spare time over the semester, I have been working on the underlying infrastructure for the node editor. If you are familiar with programming, you can see how a node editor can be used to express logic. Each node in the editor will be broken into as many as three different parts: an input, an activator, and an action. The input is some sort of trigger or change, so a variable change can serve as an input just as well as a keyboard press. Each input can be connected to one or more activators. Likewise, each activator can be connected to one or more inputs. The purpose of the activator is to handle its inputs and activate actions based on them. Each activator can also connect to one or more actions. The action is simply a link to a premade function within the code of a module. Anything passed to the action upon its activation will also be sent to its target function. This target function can also act as an input, so upon its activation it can trigger another activator. Whether it does depends on the actual code inside the action function, but it allows for some very complex behaviors.

Each input/activator/action together makes a node. The user will be able to use these nodes to create the desired behavior.
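To make the input/activator/action wiring a bit more concrete, here is a minimal Python sketch. It is not the game's actual code: the class names (`Input`, `Activator`, `Action`), the `Thruster` and `Light` modules, and the "every input activates every action" policy are all assumptions made up for illustration. Passing a value along is shown only as a sketch of the intended behavior.

```python
from typing import Any, Callable, List


class Input:
    """A trigger such as a key press or a variable change."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.activators: List["Activator"] = []

    def connect(self, activator: "Activator") -> None:
        # One input may feed one or more activators.
        self.activators.append(activator)

    def fire(self, payload: Any = None) -> None:
        for activator in self.activators:
            activator.handle(self, payload)


class Action:
    """A link to a premade function on a module."""

    def __init__(self, target: Callable[[Any], None]) -> None:
        self.target = target

    def activate(self, payload: Any = None) -> None:
        # Whatever is passed to the action is forwarded to its target.
        self.target(payload)


class Activator:
    """Handles inputs and activates the connected actions."""

    def __init__(self) -> None:
        self.actions: List[Action] = []

    def connect(self, action: Action) -> None:
        self.actions.append(action)

    def handle(self, source: Input, payload: Any) -> None:
        # Simplest possible policy (an assumption): any input triggers all actions.
        for action in self.actions:
            action.activate(payload)


class Thruster:
    """Hypothetical module; `burn` is its premade target function."""

    def __init__(self) -> None:
        self.burns: List[Any] = []
        self.burn_started = Input("burn_started")

    def burn(self, payload: Any = None) -> None:
        self.burns.append(payload)
        # The target function also acts as an input for further activators.
        self.burn_started.fire(payload)


class Light:
    """Hypothetical second module, used to show chaining."""

    def __init__(self) -> None:
        self.on = False

    def turn_on(self, payload: Any = None) -> None:
        self.on = True


thruster, light = Thruster(), Light()
key_w = Input("key_w")

fire_engine = Activator()
key_w.connect(fire_engine)
fire_engine.connect(Action(thruster.burn))

light_on_burn = Activator()
thruster.burn_started.connect(light_on_burn)
light_on_burn.connect(Action(light.turn_on))

key_w.fire("pressed")  # key press -> burn -> light turns on
```

Here the chain from the key press to the light exists only because `burn` chooses to fire its own input, which is the "depends on the actual code inside the action function" part.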

The above system has been mostly implemented so far, with the exception of passing in or handling values, saving and loading the logic, and the actual nodes. It works well for simple things like a keypress. One of the reasons that actions have to activate a target (predetermined) function on the module, instead of the actual in-game functions that the game needs in order to run, is this: it limits the user to the module's actual capabilities. That is, you cannot do something with a module unless it has been programmed to do it. In this way you can control the spacecraft however you like; your only limitation is its limitations. This makes sense: you wouldn't want someone changing their max health, making themselves indestructible, or using other hacks.
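One way to picture that restriction is a module that hands out only the functions it has explicitly exposed. The following Python sketch is an assumption about how this could look, not the game's implementation: `Module`, `expose`, `action`, `ActionNotExposed`, and the `Hull` module with its `repair` function are all hypothetical names.

```python
from typing import Callable, Dict


class ActionNotExposed(Exception):
    """Raised when user logic asks for a function the module does not offer."""


class Module:
    """Hypothetical base class: user logic can only reach exposed functions."""

    def __init__(self) -> None:
        self._exposed: Dict[str, Callable[[int], None]] = {}

    def expose(self, name: str, func: Callable[[int], None]) -> None:
        self._exposed[name] = func

    def action(self, name: str) -> Callable[[int], None]:
        # Look the target up by name; anything not registered is refused.
        try:
            return self._exposed[name]
        except KeyError:
            raise ActionNotExposed(name) from None


class Hull(Module):
    """Hypothetical module that can be repaired but not made tougher."""

    def __init__(self) -> None:
        super().__init__()
        self.max_health = 100
        self.health = 40
        self.expose("repair", self.repair)

    def repair(self, amount: int) -> None:
        # Repairs are capped at the module's own limit.
        self.health = min(self.max_health, self.health + amount)


hull = Hull()
hull.action("repair")(25)  # allowed: the module was programmed to do this

try:
    hull.action("set_max_health")  # refused: no such capability exists
except ActionNotExposed as missing:
    print(f"module has no action named {missing}")
```

The user's logic goes through `action(...)`, so it can combine the module's capabilities freely but cannot reach anything the module author did not register.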
